Nvidia at $5 Trillion: Power Takes the Stage in Washington

How a semiconductor company transformed itself into the indispensable infrastructure of American technological sovereignty, and what it means for the decade ahead.

Key Takeaways

- Nvidia briefly crosses a $5 trillion valuation, redefining corporate scale and becoming the cornerstone of U.S. and allied AI infrastructure.

- Washington emerges as the new seat of tech power, as Nvidia partners with Palantir, Nokia, and U.S. federal agencies to integrate AI across defense, telecom, and national compute systems.

- Quarterly Data Center revenue climbs to $41.1 billion, now 88% of total sales, solidifying Nvidia’s control over the hardware and software stack powering the global AI economy.

Introduction

WASHINGTON — When Jensen Huang closed his keynote at Washington’s Walter E. Washington Convention Center on October 28 with Donald Trump’s “Make America Great Again” slogan, the gesture transcended political theater. Nvidia’s chief executive was articulating a thesis that has, in thirty months, elevated his company to a $4.89 trillion valuation—briefly crossing $5 trillion in after-hours trading. The message was crystalline: artificial intelligence infrastructure is no longer corporate strategy. It is national security architecture.

The decision to bring GTC, Nvidia’s developer conference traditionally anchored in San Jose, to the nation’s capital represents the clearest signal yet of the company’s evolution from chipmaker to geopolitical actor. The shift was deliberate, timed to engage federal policymakers, defense officials, and international allies at a juncture when U.S. compute capacity underpins everything from military simulations to economic competitiveness. Huang had anticipated an appearance by President Trump, whose administration’s emphasis on domestic manufacturing and energy security aligns closely with Nvidia’s roadmap. Trump, committed to a diplomatic tour of Asia that included planned talks with Xi Jinping on trade and technology curbs, sent regrets. Despite the absence, Huang lauded Trump’s initiatives, crediting them with accelerating U.S.-based Blackwell production in Arizona and fostering the policy environment that enables AI’s industrial scale-up.

This was not a product launch masquerading as policy engagement. It was policy engagement substantiated by product capability, delivered to an audience primed for demonstrations of how semiconductors fortify sovereignty.

The Economics of Inevitability

Strip away the pageantry and what remains is financial performance that defies the gravitational pull typically exerted on companies approaching $5 trillion. Nvidia’s second-quarter fiscal 2026 results—$46.7 billion in revenue, up 56% year-over-year and 6% sequentially—would be remarkable for a firm one-tenth its size. That the Data Center segment now commands 88% of revenue, generating $41.1 billion in a single quarter, suggests not market dominance but market definition. Nvidia is not winning a race. It is laying the track.

Gaming contributed $4.3 billion, up 49% year-over-year. Automotive and robotics added $0.6 billion, surging 69%. Professional visualization also reached $0.6 billion, up 32%. Non-GAAP gross margin reached 72.7%, bolstered by production efficiencies and Blackwell’s early momentum, with Q3 guidance targeting 73.5%.
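The segment figures can be cross-checked with a few lines of arithmetic. This is a minimal sketch using the rounded numbers quoted in this article; the helper names are illustrative, and the small gap between the itemized segments and the $46.7 billion total reflects rounding plus revenue the article does not itemize.

```python
# Rounded Q2 fiscal 2026 figures quoted in the article, in billions of USD.
TOTAL_REVENUE = 46.7
SEGMENTS = {
    "Data Center": 41.1,
    "Gaming": 4.3,
    "Automotive and robotics": 0.6,
    "Professional visualization": 0.6,
}


def share_pct(part: float, whole: float) -> float:
    """Return part as a percentage of whole."""
    return 100.0 * part / whole


def prior_period(current: float, growth_pct: float) -> float:
    """Back out the earlier period's value from the current value and its growth rate."""
    return current / (1.0 + growth_pct / 100.0)


data_center_share = share_pct(SEGMENTS["Data Center"], TOTAL_REVENUE)
# Revenue not covered by the four segments listed in the article (includes rounding).
unitemized = TOTAL_REVENUE - sum(SEGMENTS.values())
year_ago_revenue = prior_period(TOTAL_REVENUE, 56.0)      # implied Q2 fiscal 2025
prior_quarter_revenue = prior_period(TOTAL_REVENUE, 6.0)  # implied Q1 fiscal 2026

print(f"Data Center share: {data_center_share:.1f}%")
print(f"Unitemized remainder: ${unitemized:.1f}B")
print(f"Implied year-ago revenue: ${year_ago_revenue:.1f}B")
print(f"Implied prior-quarter revenue: ${prior_quarter_revenue:.1f}B")
```

Because every input is rounded to one decimal place, the derived values are approximations, not restated results.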

The forward price-to-earnings ratio of 30, set against projected earnings-per-share growth of 44%, presents an unusual proposition: this company may actually be undervalued. Traditional valuation frameworks struggle here because they assume competitive dynamics that do not yet exist. AMD’s MI300 series remains a distant second, capturing perhaps 10-15% of incremental AI GPU demand. Intel’s Gaudi accelerators have gained negligible traction, hampered by software ecosystem deficits. Hyperscaler custom silicon—Google’s TPUs, Amazon’s Trainium—serves specific workloads but cannot replicate Nvidia’s full-stack integration of hardware, software, and ecosystem network effects.
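The undervaluation argument is, in effect, a price/earnings-to-growth (PEG) comparison, a common heuristic the article does not name explicitly. A minimal sketch, assuming the forward P/E of 30 and the 44% projected EPS growth quoted above, and the conventional reading that a PEG below 1 suggests growth is not fully priced in:

```python
def peg_ratio(forward_pe: float, eps_growth_pct: float) -> float:
    """Forward P/E divided by expected EPS growth expressed in percentage points."""
    if eps_growth_pct <= 0:
        raise ValueError("PEG is not meaningful for zero or negative growth")
    return forward_pe / eps_growth_pct


nvda_peg = peg_ratio(forward_pe=30.0, eps_growth_pct=44.0)
print(f"PEG: {nvda_peg:.2f}")

# Sensitivity: the same multiple against slower growth.
print(f"PEG if growth slows to 30%: {peg_ratio(30.0, 30.0):.2f}")
```

The heuristic is only as good as the growth estimate: if EPS growth slowed to 30%, the same forward multiple would produce a PEG of 1.0, erasing the apparent discount.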

Trailing metrics underscore the disparity: Nvidia’s trailing P/E stands at 53.81 and its price-to-book ratio at 45.93 across 24.35 billion shares outstanding, levels that price in a trajectory detached from cyclical semiconductor norms.
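These multiples can be tied back to the headline valuation. This sketch assumes the $4.89 trillion market capitalization, the 24.35 billion share count, and both trailing multiples were quoted at the same moment, which the article does not state; the outputs are rough implied values, not reported figures.

```python
MARKET_CAP = 4.89e12          # USD, from the article
SHARES_OUTSTANDING = 24.35e9  # from the article
TRAILING_PE = 53.81
PRICE_TO_BOOK = 45.93

# Price per share implied by market cap and share count.
implied_price = MARKET_CAP / SHARES_OUTSTANDING
# Trailing earnings per share implied by that price and the trailing P/E.
implied_trailing_eps = implied_price / TRAILING_PE
# Book value per share implied by that price and the price-to-book ratio.
implied_book_per_share = implied_price / PRICE_TO_BOOK

print(f"Implied share price: ${implied_price:.2f}")
print(f"Implied trailing EPS: ${implied_trailing_eps:.2f}")
print(f"Implied book value per share: ${implied_book_per_share:.2f}")
```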

This is the economic expression of architectural lock-in. CUDA, Nvidia’s parallel computing platform, has become the lingua franca of AI development. More than three million developers work within its environment. NVLink interconnects provide the chip-to-chip bandwidth required for frontier model training: up to 1.8 terabytes per second per GPU with fifth-generation NVLink in Blackwell configurations. The switching costs are not merely financial; they are temporal and organizational. Retraining research teams, rewriting codebases, and re-optimizing inference pipelines could set a hyperscaler back eighteen months, an eternity in a sector where competitive advantage is measured in weeks.

Q3 revenue guidance of $54 billion (±2%), implying 54% year-over-year growth, reflects these dynamics even as U.S. export restrictions on China, historically 20-25% of sales, cut H20 sales to Chinese customers to zero in Q2; the quarter’s results instead included a $180 million release of previously reserved H20 inventory.
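The guidance band and its implied growth follow from simple arithmetic. This is a sketch under one labeled assumption: the prior-year base of $35.08 billion is Nvidia’s reported Q3 fiscal 2025 revenue, which the article does not state.

```python
def guidance_band(midpoint: float, tolerance_pct: float) -> tuple[float, float]:
    """Return the (low, high) range implied by a midpoint and a +/- percentage tolerance."""
    delta = midpoint * tolerance_pct / 100.0
    return midpoint - delta, midpoint + delta


def yoy_growth_pct(current: float, prior: float) -> float:
    """Year-over-year growth in percent."""
    return 100.0 * (current / prior - 1.0)


Q3_GUIDANCE = 54.0     # $B, from the article
PRIOR_YEAR_Q3 = 35.08  # $B, assumption: reported Q3 fiscal 2025 revenue (not in the article)

low, high = guidance_band(Q3_GUIDANCE, 2.0)
for label, value in (("low", low), ("midpoint", Q3_GUIDANCE), ("high", high)):
    print(f"{label}: ${value:.2f}B, {yoy_growth_pct(value, PRIOR_YEAR_Q3):.1f}% YoY")
```

Across the ±2% band, implied year-over-year growth runs from roughly 51% to 57%, with the midpoint near the 54% the article cites.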

Blackwell and the Infrastructure Thesis

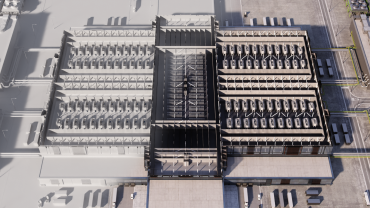

The Blackwell platform, whose data center revenue grew 17% quarter over quarter in Q2, exemplifies Nvidia’s transition from component supplier to systems integrator. This is not a GPU. It is an architecture for what Huang terms “AI factories”: facilities consuming 100 megawatts initially, scaling to multi-gigawatt campuses that rival the power draw of small cities.

Omniverse DSX, unveiled during the Washington keynote, layers power orchestration (DSX Flex for dynamic grid management), performance tuning (DSX Boost for workload optimization), and operational convergence (DSX Exchange for IT-OT unification) onto this foundation. BlueField-4 data processing units, combining 64-core Grace CPUs with ConnectX-9 networking, power the operating system of these installations, delivering six times the compute of BlueField-3.

The implications extend beyond corporate data centers. Nvidia and its partners will build seven new Department of Energy supercomputers. At Argonne National Laboratory, Nvidia and Oracle are deploying Solstice, with 100,000 Blackwell GPUs, and Equinox, with an additional 10,000; together the two systems are slated to deliver 2,200 exaflops of AI performance for national security research, climate modeling, and materials science.
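Dividing the headline figure by the GPU count gives a sense of per-accelerator scale. This is a back-of-envelope sketch with two stated assumptions: the article does not say which numeric precision the 2,200-exaflop figure uses (vendor “AI” exaflop figures are usually quoted at reduced precision, so they are not comparable to FP64 supercomputer rankings), and the code computes both readings of whether the figure covers Solstice alone or Solstice plus Equinox.

```python
def petaflops_per_gpu(total_exaflops: float, gpu_count: int) -> float:
    """Average throughput per GPU, converting exaflops to petaflops (1 EF = 1,000 PF)."""
    return total_exaflops * 1000 / gpu_count


HEADLINE_EXAFLOPS = 2200  # from the article; numeric precision not specified

# Assumption: two possible readings of which systems the headline figure covers.
solstice_only = petaflops_per_gpu(HEADLINE_EXAFLOPS, 100_000)
solstice_plus_equinox = petaflops_per_gpu(HEADLINE_EXAFLOPS, 100_000 + 10_000)

print(f"Solstice alone: {solstice_only:.1f} PF per GPU")
print(f"Solstice + Equinox: {solstice_plus_equinox:.1f} PF per GPU")
```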

Two marquee commercial partnerships illustrate the platform’s reach. Nvidia’s $1 billion equity investment in Nokia anchors a collaboration to develop AI-native 6G telecommunications infrastructure—the Aerial RAN computer that embeds Nvidia chips and software into base stations. Analysts project the AI-RAN market will exceed $200 billion cumulatively by 2030, and T-Mobile will conduct field trials beginning in 2026. Huang positioned the deal as strategic reclamation: “Our fundamental communication network is based on foreign technologies. That has to stop.”

Equally consequential is the Palantir integration. Nvidia’s GPU-accelerated computing, CUDA-X libraries, and Nemotron reasoning models now embed within Palantir’s Ontology framework—the data layer at the core of its AI Platform. This creates what both companies term “operational AI”: systems that automate complex enterprise and government workflows by creating digital replicas of supply chains, logistics networks, and operational processes. Lowe’s is the pilot customer, building a virtual twin of its global distribution network for dynamic optimization. For Nvidia, the partnership extends its addressable market beyond pure compute into decision intelligence and agentic automation. For Palantir, it transforms the platform from software into full-stack AI orchestration running on Blackwell architecture.

Additional partnerships reinforce ecosystem breadth: CrowdStrike embeds Nvidia AI agents for real-time cybersecurity; Uber plans to begin scaling toward 100,000 robotaxis built on DRIVE AGX Hyperion starting in 2027; and automakers including Lucid, Mercedes-Benz, and Stellantis are adopting the platform for Level 4 autonomy.

This is infrastructure in the classical sense: assets with decades-long lifespans, underwriting national capability in domains where computational superiority translates directly to strategic advantage.

The quantum integration via NVQLink further illustrates the company’s temporal arbitrage. By linking quantum processors to GPUs at four-microsecond latencies, programmed through CUDA-Q, Nvidia positions itself at the intersection of two exponential curves, and the effort already engages every U.S. DOE laboratory. Even if quantum computing remains five or ten years from commercial viability, Nvidia will have embedded itself as the essential middleware: the layer that translates quantum operations into classically useful outputs.

The Geopolitical Hedge

Export controls targeting China have removed what was historically 20-25% of Nvidia’s revenue. Yet the stock trades at all-time highs. The market is signaling that geopolitical alignment now carries a premium that offsets, and perhaps exceeds, the lost Chinese revenue.

This calculation rests on three pillars. First, domestic demand from U.S. hyperscalers and enterprise customers has absorbed the shortfall, supported by capital expenditure guidance exceeding $1 trillion annually through 2030 across the sector. Second, allied markets—European Union, Japan, South Korea, Australia—are expanding as governments prioritize compute self-sufficiency and seek alternatives to Chinese supply chains. Third, and most consequentially, Nvidia has positioned itself as the primary beneficiary of federal AI spending, defense modernization, and CHIPS Act extensions, with DOE contracts exemplifying the pivot.

The Washington venue choice was not symbolic. It was transactional. By aligning product roadmaps with federal priorities—domestic manufacturing, energy grid integration, nuclear power renaissance—Nvidia has transformed regulatory risk into regulatory advantage. Expedited approvals, subsidies, and preferential access to government contracts become more likely when a company can credibly claim to be architecting American technological hegemony.

Huang’s effusive praise for Trump, despite the president’s absence, underscores this strategy. The political vector matters less than the bipartisan consensus that AI infrastructure is strategic infrastructure. Nvidia has succeeded in making its corporate interests indistinguishable from national interests—a feat last achieved at this scale by defense primes during the Cold War.

Risks and the Margin of Safety

Two threats warrant attention. The first is hyperscaler vertical integration. If Amazon, Microsoft, Google, and Meta achieve sufficient internal capability with custom silicon, Nvidia’s pricing power erodes. The counter is that training foundation models, the computationally intensive workloads where Nvidia reigns, will remain dominated by general-purpose GPUs for the foreseeable future. Inference workloads may migrate to ASICs, but training is where margins live, sustaining gross margins above 70%.

The second is valuation reversion. At $4.89 trillion, Nvidia is priced for flawless execution. Any stumble in Blackwell yields, delays in next-generation architectures like Rubin, or macroeconomic shocks that curtail capital spending could trigger sharp corrections. The forward P/E of 30 provides cushion, but only if growth trajectories hold. November’s Q3 earnings will clarify whether $54 billion in guided revenue was conservative or aspirational, particularly as Blackwell ramps and non-China sales offset restrictions.

Yet even these risks carry nuance. Hyperscaler ASICs validate the market Nvidia created; they do not obviate it. And valuation concerns assume static business models. Nvidia is no longer simply selling chips. It is monetizing an entire stack—hardware, software, networking, support—at gross margins above 70%. This is Apple’s playbook applied to enterprise infrastructure, with trailing twelve-month revenue now exceeding $165 billion.
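The trailing twelve-month figure is the sum of the four most recent reported quarters. In this sketch, only the Q2 fiscal 2026 figure appears in the article; the other three quarterly values are an assumption drawn from Nvidia’s reported results and should be checked against its filings.

```python
# Assumption: Nvidia's reported quarterly revenue in $B for the four quarters
# ending Q2 fiscal 2026. Only the Q2 fiscal 2026 figure is quoted in the article.
QUARTERLY_REVENUE = {
    "Q3 FY2025": 35.08,
    "Q4 FY2025": 39.33,
    "Q1 FY2026": 44.06,
    "Q2 FY2026": 46.74,
}

# Trailing twelve months = sum of the latest four quarters.
ttm_revenue = sum(QUARTERLY_REVENUE.values())
print(f"Trailing twelve-month revenue: ${ttm_revenue:.2f}B")
```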

The Inflection Unfolding

Nvidia’s ascent to $5 trillion represents more than a market-capitalization milestone. It marks the point at which AI transitions from experimental technology to foundational infrastructure, the electricity or telecommunications of the 21st century. The capital intensity is staggering: terawatt-scale power demands, multi-gigawatt data centers, nuclear plant construction to meet baseload requirements. These are investments with 30-year horizons, locking in architectural choices made today. Hyperscalers alone plan $100-200 billion in annual AI capex, much of it flowing to Nvidia’s ecosystem, while enterprises awaken to agentic AI’s inference needs.

For policymakers, the lesson is that compute capacity is now a strategic resource, subject to the same logic that governs oil reserves or rare earth minerals: vulnerable to scarcity, hoarding, and alliances. Washington’s GTC debut signals a new geography of power, where silicon fabs in Arizona and Texas rival shipyards in strategic importance.

For investors, the implication is that Nvidia’s moat—technical, economic, and increasingly political—may prove wider than current multiples suggest, with sovereign AI deals in Europe and Asia providing diversification. And for competitors, the window to challenge this dominance is closing, if it has not already shut. Ecosystem effects compound faster than hardware iterations.

Huang’s declaration that “The Age of AI has begun” is both marketing and macroeconomic forecast. Nvidia has built the infrastructure for that age—from CUDA’s developer base to NVQLink’s quantum bridge—positioning itself as the quartermaster of progress. Whether it can sustain this position will define not just a company’s trajectory, but the shape of technological power for the generation ahead, as AI factories reshape grids, economies, and alliances in equal measure.