API Wars Have Been Declared

Inside The Silent Arms Race to Reverse-Engineer AI Models Through Test Queries

Earlier this year OpenAI made a startling allegation: it had detected Chinese startup DeepSeek using what the company called a “distillation pattern”, meaning millions of short, low-temperature prompts fired at GPT-4 to siphon off its answers for use in training a cheaper clone. Although DeepSeek denied deliberate wrongdoing, industry insiders said the incident is one of the most surprising cases yet of a startup reverse-engineering a frontier model through aggressive API probing.

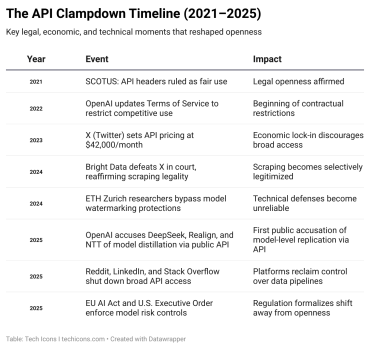

The DeepSeek affair came after a different kind of API confrontation. Under Elon Musk, X began charging academic researchers up to $42,000 per month for full firehose API access, which they had previously enjoyed at far lower cost.

In the world of open APIs, copying isn’t theft. It’s timing.

Well before those corporate flare-ups, academics had already proved the feasibility of “black-box” model extraction. A 2024 pre-print from researchers at a number of Hong Kong universities introduced the LoRD (Locality-Reinforced Distillation) attack, showing that with fewer than 100 carefully crafted queries, a team could transfer task-specific skills from a 175-billion-parameter “teacher” into an eight-billion-parameter “student” that kept 95% of the original accuracy. The authors explained in the paper that they had no privileged access, just the public text any paying customer receives. LoRD and its successors are now the go-to blueprints for cash-constrained startups, proving that a single night of probe-flooding can compress years of R&D into hours of GPU time.

From Slack to Scripts

Industrial espionage isn’t new, nor is it uncommon. One of the most eye-popping examples of alleged corporate spying has surfaced in recent months, with HR tech firm Rippling alleging Deel orchestrated a “multi-month campaign to steal a competitor’s business information with help from a corporate spy.” The supposed spy “obsessively and systematically accessed Slack channels where he had no legitimate business interest,” conducting more than 6,000 searches, and was caught, Rippling said in a court document, after the firm laid a trap. Deel denies the allegations.

Yet allegedly burying employees deep within a business’s corporate infrastructure is a labour-intensive, high-wire act that can go catastrophically wrong if caught. Instead, it’s now possible to do small-scale spying through open API doors, analysing how a business operates, and how its main products work, by sending test queries to its public interfaces. Software has always been built on imitation. What’s new is the gigantic leverage that a single night of probe-flooding can buy a business, and how that fits into the scrappy, resourceful and always eager startup psyche that typifies so many young businesses in the tech world.

No startup is unique for long. Popular features and products are often copied and adapted by competitors eager to muscle in as soon as they reach a critical mass of popularity and use among customers.

Industrial espionage didn’t vanish. It just started using better tools.

Using public API access to probe and prod at a competitor’s software and products is an open secret in the tech sector, says Andrey Korchak, former chief technology officer and co-founder of Monite, a startup. Indeed, competitor analysis is a large part of due diligence when setting up a business.

“Reverse engineering, OSINT, documentation analysis, and other similar techniques have been around for decades,” Korchak says. “If a company runs a profitable software product in a large market, customers will eventually analyse its product, documentation, inputs, and outputs.”

And in the 1980s, after IBM accused Fujitsu of copying extensive portions of its MVS and VM operating system code while building IBM-compatible mainframes, the Japanese company was ordered to pay IBM hundreds of millions of dollars in licensing fees.

That longstanding tradition means anyone setting up a company ought to come to terms with a few realities. “By default, when you publish software, you should expect that others will examine it at some point,” Korchak explains. “This remains true today – if your company creates software that is interesting enough, people will review and analyse it carefully.”

All’s Fair in Love, Code, and Copying

Companies draw on a well-honed operational playbook. First come “ghost” developer accounts, sometimes registered with burner e-mails, and often sprinkled across different IP ranges to evade geofencing. A single scraping script can cycle through hundreds of such identities, keeping below the per-minute rate limits of every individual key while still hammering the target service with thousands of calls an hour. The next step can involve headless browsers and proxy pools, which rotate fingerprints and defeat CAPTCHA challenges. Finally, the harvested responses feed into private dashboards that track latency, error codes and subtle shifts in behaviour, raising an alert the moment a competitor ships a new parameter or output style.
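The alerting step at the end of that playbook is, at its core, ordinary API change monitoring. As a rough illustration only, the Python sketch below compares two saved JSON responses and reports which fields have appeared or disappeared. The function names and the sample payloads are invented for this example and are not taken from any company or tool mentioned in this article.

```python
from typing import Any


def flatten_keys(payload: Any, prefix: str = "") -> set[str]:
    """Return every dotted key path found in a JSON-like payload."""
    paths: set[str] = set()
    if isinstance(payload, dict):
        for key, value in payload.items():
            path = f"{prefix}.{key}" if prefix else key
            paths.add(path)
            paths |= flatten_keys(value, path)
    elif isinstance(payload, list):
        # List items share one path, marked with "[]".
        for item in payload:
            paths |= flatten_keys(item, f"{prefix}[]")
    return paths


def schema_drift(baseline: Any, latest: Any) -> dict[str, list[str]]:
    """Report fields present in only one of two response snapshots."""
    old, new = flatten_keys(baseline), flatten_keys(latest)
    return {"added": sorted(new - old), "removed": sorted(old - new)}


# Hypothetical snapshots of the same endpoint, captured on different days.
baseline = {"id": 1, "choices": [{"text": "hi"}]}
latest = {
    "id": 2,
    "choices": [{"text": "hi", "logprobs": None}],
    "usage": {"tokens": 5},
}
drift = schema_drift(baseline, latest)
print(drift)
```

The same comparison is just as useful to a provider, who can run it against their own endpoints to see exactly what an outside observer would notice after a release.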

The real product isn’t the model. It’s the ability to replicate it faster than the next guy.

Data scraping isn’t new, but it is controversial. X has previously tried, unsuccessfully, to sue a company that offers it, called Bright Data. After winning the 2024 court case, Bright Data said the judgement showed that “[public information on the web] belongs to all of us, and any attempt to deny the public access will fail.”

As James Bent, vice president of engineering at Virtuoso QA, specialists in AI testing, puts it, “although I’d like to think that there’s some ethics and pride which says we’ll build what we design and originate, versus just copying, that’s not really how the world works. All’s fair in love and war – and building product.”

The ability to call on APIs and bombard them with test queries to get organisations to give up their trade secrets is simply the way of the world now, agrees Joe Davies, from SEO experts fatjoe. “What used to be market research is now a game of digital cat and mouse, with startups reverse-engineering each other’s models through test queries and API access,” he says. Davies points out that the practice is often seen as standard for those in the early stages of growing their business. “Startups often benchmark competitors by sending tailored prompts to public APIs, tracking patterns in tone, formatting, and even logic pathways,” he explains. “Some go further, building internal dashboards that monitor outputs in real-time, or deploying ghost users and automated crawlers to mimic real usage at scale,” he adds. “It takes more than just mere observation; it’s active decoding.”

Law, Ethics, and the Blur in Between

Platforms’ own contracts and terms of service attempt to draw an unequivocal line in the sand about the legitimacy of such action. OpenAI’s public terms of use forbid any customer to “use Output to develop models that compete with OpenAI,” explicitly classing automated data extraction as a breach that can lead to immediate termination. Anthropic’s commercial terms go further, barring customers from “access[ing] the Services to build a competing product or service… [or] reverse engineer[ing] or duplicate the Services” without express written approval. In practice these clauses are enforceable only when the scrapers reveal their true identity, which is precisely what many strategies are designed to avoid.

Whatever analysis and insights can be gleaned from repeated API calls are somewhat limited in scope, says Korchak. “Most likely, external users will not be able to extract any critical information from public documentation and APIs,” he believes. “However, they can piece together parts of the business logic or basic implementation details. Even these small insights can help competitors take shortcuts in their tech strategies.” And generative AI shifts the level at which this can be done, bombarding target software with queries that allow competitors to build up, piece by piece, a view of what exactly it’s doing and how: the secrets to its success.

AI can help produce additional data for competitor datasets, says Korchak, and can enter prompts, gathering responses that can then be used for AI analysis and benchmarking. “Although providing training sets to your own competition might be unpleasant, it barely changes the overall market balance,” he says.

Legal boundaries mean little when engineering incentives run deep.

Debate has roiled over the morality and legality of sending test queries to competitors’ tools to try and reverse-engineer what makes them tick. Legal precedent offers little clarity. In 2021 the U.S. Supreme Court sided with Google in its decade-long clash with Oracle, ruling that Google’s re-implementation of the Java API headers for Android was fair use, a decision hailed by the software industry as an endorsement of interoperability. Yet the opinion underlined that Google had copied only the interface, not the underlying code or outputs, leaving open the question of whether cloning a model’s behavioral quirks crosses a different line.

Reverse-engineering is also partially shielded by statute. Section 1201(f) of the U.S. Digital Millennium Copyright Act permits circumvention “for the sole purpose of identifying and analysing those elements… necessary to achieve interoperability.” Europe’s Directive 2009/24/EC provides a similar decompilation exemption in Article 6. But both frameworks condition the defence on interoperability, not competitive cloning, a distinction that litigants are only now beginning to test.

The activity is often described as sitting in a grey area: simple research or intellectual theft, depending on your point of view. Korchak sees it in starker terms. “If APIs are public and an engineer does not intentionally break the service, cause a denial of service, or violate the API provider’s terms and conditions, then it is simply research,” he says. “If any of these issues occur, it becomes a legal violation. This is not a grey area – there are clear rules and regulations.”

Still, the practice is questionable, says Davies. “While no laws explicitly forbid querying a public API, copying a model’s structure or training your own on scraped outputs walks a fine line between competitive research and intellectual theft,” he says. “Without clearer regulations, it’s a Wild West for data ethics.”

Everything Leaks

In response, startups often throw up barriers and defences to try to stop the probing. Some software providers also explicitly prohibit using their products for parsing or analysis, in an attempt to head off incursions before they begin. “We’re seeing output watermarking, usage throttling, and even ‘noisy’ model behaviors to throw off reverse engineers,” says Davies. “But these countermeasures are only fueling the espionage arms race: proof that in the post-open-source world, transparency is a liability.” Such restrictions were in place long before the rise of AI, but they matter more now that AI tools, and agentic AI in particular, make it so easy to blast out repeated queries.

Those defences increasingly revolve around watermarking. In late 2024 ETH Zürich security researchers demonstrated a $50 “watermark-stealing” attack that could forge DeepMind’s SynthID-Text marks from only a few thousand black-box queries, allowing attackers to generate unlimited spoofed text that still passes an origin check. That in turn spurred providers to rotate watermark keys more frequently, raising implementation costs and irritating legitimate customers who rely on deterministic outputs.
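Of the countermeasures Davies lists, usage throttling is the simplest to picture. One common way to implement it is a token bucket, sketched below in Python as an illustration rather than a description of how any provider named here actually does it. The class name, the parameters and the numbers are assumptions made for the example, and the clock is passed in explicitly so the behaviour is easy to follow.

```python
class TokenBucket:
    """Allow short bursts while capping the sustained request rate."""

    def __init__(self, capacity: float, refill_per_sec: float, now: float = 0.0) -> None:
        self.capacity = capacity              # largest burst, in requests
        self.refill_per_sec = refill_per_sec  # sustained rate, requests per second
        self.tokens = capacity                # start with a full bucket
        self.last_seen = now                  # timestamp of the previous call

    def allow(self, now: float) -> bool:
        """Return True if a request arriving at time `now` may proceed."""
        elapsed = max(0.0, now - self.last_seen)
        # Top the bucket up for the time that has passed, never above capacity.
        self.tokens = min(self.capacity, self.tokens + elapsed * self.refill_per_sec)
        self.last_seen = now
        if self.tokens >= 1.0:
            self.tokens -= 1.0
            return True
        return False


# Hypothetical limits: bursts of three requests, one request per second sustained.
bucket = TokenBucket(capacity=3, refill_per_sec=1.0)
burst = [bucket.allow(now=0.0) for _ in range(5)]
later = bucket.allow(now=2.0)
print(burst, later)
```

A bucket tied to a single API key is exactly what the rotating “ghost” accounts described earlier are built to slip under. A provider might therefore choose to key buckets on something broader, such as a billing account or a network range, although that choice is an assumption here and not something the sources quoted in this article describe.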

You don’t need a breach when the protocol leaks by design.

Economic friction is another blunt instrument. When X priced its enterprise API at $42,000 per month in March 2023, up from near-zero for academic research, dozens of open-source monitoring projects simply shut down, and data brokers passed the cost on to venture-funded clients. The price hike prompted an enormous outcry from academics. Critics argued that the paywall was less about revenue than about starving potential competitors of a vital stream of conversational data. Reddit has also decided to turn API access into a pay-to-play enterprise, recognising the value of the data it holds on its users, while websites have thrown up barriers to prevent AI scrapers from accessing their data, holding out for payment in exchange for access.

Throwing up those hurdles in the way of people trying to get hold of this information is all well and good. But there’s a stark reality that any perceived victims need to recognise: “It is very difficult to take legal action and enforce terms and conditions on users,” says Korchak. “Only technical measures, such as blacklisting or firewall restrictions, can effectively stop users from analysing APIs with AI or other tools.”

The Moat Is Not the Model

All of that means lasting competitive advantage has gone by the wayside, replaced by rapid redesigns and competitors mimicking whatever proves successful. “Very few people can claim to have or hold onto first mover advantage anymore,” says Virtuoso QA’s Bent. He doesn’t blame businesses for engaging in the tactic, and highlights the same challenges around morality and legality that Korchak identified. “You could argue that if the information is publicly available then I can go to a public API and scrape it,” says Bent. “Even if I’m using AI or other tools which make that much, much easier, is it legally wrong?”

Bent doesn’t necessarily have an answer to the question but suggests people will likely divide evenly into two camps: “Is it wrong or is it just the nature of a competitive market?” he asks. That reality underscores a broader industry shift. Building glitzy features is no longer enough; the moat increasingly lies in distribution, partnerships and community, all assets that competitors can’t simply scrape and repurpose as they see fit. The tug-of-war between openness and secrecy will therefore continue, but companies that earn users’ trust, and lock them into their ecosystem, may prove more resilient than those that rely on transient technical edges alone.

What scales isn’t just code. It’s trust, positioning, and control.

How businesses ought to react to the new competitive reality, where your idea can be swept out from under you with a raft of API calls, is another challenge. But Bent believes that it’s worth considering the issue through another lens. “You could also argue that the power is not necessarily in what you have, but in what you intend to build upon in your product,” he says. “As anybody selling product knows, most products will do the job, but it’s also about how you sell, enable, support, partner and go to market. All of those things are completely independent of the product itself.” Focusing on those, rather than on the risks of someone sniffing around the inner workings of your business, may be the answer to staying ahead of snooping competitors.

The big unknown is what, if anything, can be done to try to mitigate the ongoing API wars now they’ve been declared. “To be completely fair, this happens in every scenario,” Naveen Rao, vice president of AI at Databricks, told Reuters when the scale of DeepSeek’s alleged API activities was first uncovered. “Competition is a real thing, and when it’s extractable information, you’re going to extract it and try to get a win,” he said. “We all try to be good citizens, but we’re all competing at the same time.”