Powered by AI. Triggered by Employees.

For years, the term “insider threat” has been defined as a cybersecurity risk emerging from the potential for an employee to use their authorized access to deliberately cause harm to an organisation and the people who work there.

However, the unprecedented rise of generative AI tools such as ChatGPT and Claude is leading to a new—unintentional—insider threat: employees feeding sensitive data into these tools, often without their employers’ knowledge.

The majority of organisations (more than 60%) are now using generative AI tools, a rapid increase from just 33% in 2023. That growth is staggering, and the real figure is likely even higher, as research shows that workers often use AI tools at work without clearance from their employers.

Worse, new research from Harmonic Security, shared with TECH ICONS ahead of publication, reveals that the use of generative AI tools in the enterprise, with or without an employer’s knowledge, is leading to the exposure of sensitive information and presents a whole new cybersecurity threat for unprepared businesses.

Silent but deadly risk

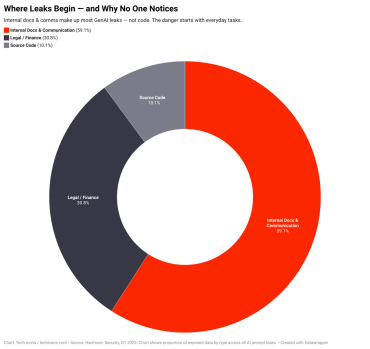

Harmonic’s research, which analysed more than 176,000 prompts and thousands of file uploads from a sample of 8,000 generative AI users, found that much of the exposure relates to sensitive data including proprietary code and legal documents: exposure pertaining to legal and finance doubled to 30.8%, and sensitive code almost doubled to 10.1%.

“Employees are entering confidential data without a second thought,” Dr. Chad Walding, co-founder of NativePath, told TECH ICONS. “For instance, a lawyer could upload the contents of a non-disclosure agreement and submit confidential data, not realizing they are exposing confidential information.”

The new insider threat doesn’t break in — it’s invited in, one prompt at a time.

Dr. Anmol Agarwal, a professor at George Washington University specialising in AI and cybersecurity, added: “If a developer copies and pastes code into ChatGPT that contains company information, and another developer from another company asks the tool about a similar topic, then that other developer can also receive sensitive, confidential code and information.”

This is also a significant issue in the healthcare sector, which has shown an eagerness to embrace generative AI due to its bold promises of enhancing efficiency and effectiveness across the industry. Some 70% of healthcare organisations say they are pursuing or have already implemented gen AI capabilities, yet these same organisations often rely on a complex web of third-party vendors, many of whom don’t design products with security-first principles in mind.

“In environments where sensitive patient data is constantly in motion, even a small lapse can have life-threatening implications,” Justin Endres, head of data security at Seclore, told TECH ICONS. “These vulnerabilities are a silent but deadly risk to both organizations and the patients they serve.”

Unintentional exfiltration

The rise of generative AI use in the enterprise, and the resulting increase in sensitive business information being fed into these tools, is widening the gap between innovation and security.

Many organisations still don’t know how to properly secure AI inputs, validate outputs, or detect adversarial behavior in real-world scenarios, and Harmonic’s research found that 55% of organisations lack formal governance structures related to AI tools.

This lack of awareness could be costly, as organizations that mishandle sensitive data through AI tools could face hefty legal penalties. For example, under Europe’s GDPR, breach-hit companies can be fined up to €20 million or 4% of their global annual turnover, whichever is higher, while in the US, healthcare companies bound by HIPAA could be fined up to $1.5 million per year for each violation category related to data breaches. Companies operating in the US may also be subject to the California Privacy Rights Act (CPRA), which mandates stringent data handling processes.
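To make the GDPR ceiling concrete, here is a minimal sketch of the arithmetic, assuming the most serious tier of infringement, where the cap is the higher of €20 million and 4% of global annual turnover. The function name and the sample turnover figures are illustrative only, and the result is an upper bound, not a prediction of what a regulator would actually impose.

```python
# Illustrative only: upper bound on a GDPR fine for the most serious tier.
GDPR_FIXED_CAP_EUR = 20_000_000   # fixed ceiling in euros
GDPR_TURNOVER_PERCENT = 4         # percentage of global annual turnover


def gdpr_max_fine_eur(global_annual_turnover_eur: int) -> int:
    """Return the higher of the fixed cap and 4% of turnover (whole euros)."""
    turnover_based_cap = global_annual_turnover_eur * GDPR_TURNOVER_PERCENT // 100
    return max(GDPR_FIXED_CAP_EUR, turnover_based_cap)


if __name__ == "__main__":
    # Hypothetical turnover figures, not taken from any real company.
    for turnover in (100_000_000, 2_000_000_000):
        ceiling = gdpr_max_fine_eur(turnover)
        print(f"turnover EUR {turnover:,} -> ceiling EUR {ceiling:,}")
```

For a smaller business the fixed €20 million figure dominates; for a company turning over €2 billion, the 4% rule lifts the ceiling to €80 million.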

AI tools don’t come with disclaimers, but they should. The wrong prompt can cost millions.

However, organizations’ unpreparedness is good news for attackers. While traditional hacking methods require extensive coding knowledge, the rise of new techniques such as prompt hacking democratizes the ability to exploit AI systems. This means even a novice can manipulate AI models for sensitive data exfiltration.

“AI is essentially an unintentional exfiltration point that could lead to serious consequences,” Endres told TECH ICONS. “There are faults in Gen AI models, and faults in how companies continue to adopt faster than their data security posture can keep up.”

Prohibition won’t work

While the use of generative AI tools in the enterprise has skyrocketed, only now are organizations beginning to recognize the hidden risks—violations of privacy, data exposure, and potential non-compliance with regulations that carry hefty fines.

Experts agree that, to counter this emerging threat, organisations need to implement clear AI-use policies that draw a hard line between what’s acceptable and what’s not. After all, there is no way to stop employees from using generative AI: Harmonic’s research found that more than 45% of data submissions came from personal accounts, suggesting a large share of AI use bypasses IT oversight.

“Companies have been trying to prohibit Google Translate for a decade, but employees would continue using it,” Maria Sukhareva, principal key expert in AI at Siemens, told TECH ICONS. “There is no way to prohibit it; people will find a way to trick the system.”

These clear-use policies need to be precisely that: clear. They need to set boundaries for staff regarding the appropriate and inappropriate use of AI, ensure compliance with relevant regulations and standards to prevent legal and ethical violations, and reinforce enterprise security policies, ensuring AI is not used to compromise sensitive information.

One organisation, London-based law firm MK Law, tells TECH ICONS that it moved fast to implement an AI policy after it recognised the data exposure risk first-hand.

“It’s not a theoretical concern,” Marcus Denning, a senior lawyer at MK Law, told TECH ICONS. “At our company, we’ve had to formally reissue internal guidelines twice in the last six months after junior staff used AI tools to ‘summarize’ client letters.”

“Most policies nowadays are vague and unenforceable, but we did the reverse. Our AI-use framework is case-type specific. Litigation-related requests are forbidden, but marketing queries are logged and tracked.”
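A case-type-specific rule like the one Denning describes could be enforced in software along the following lines. This is a minimal sketch, not MK Law's actual system: the `POLICY` table, the `check_request` helper, the in-memory `audit_log`, and the choice to block any case type that is not listed are all assumptions made for illustration.

```python
from enum import Enum


class Decision(Enum):
    BLOCK = "block"
    ALLOW_AND_LOG = "allow_and_log"


# Hypothetical policy table mirroring the two examples in the quote.
POLICY = {
    "litigation": Decision.BLOCK,
    "marketing": Decision.ALLOW_AND_LOG,
}

# Stand-in for a real audit trail; a production system would persist this.
audit_log = []


def check_request(case_type: str, user: str) -> Decision:
    """Decide whether an AI request for this case type may proceed."""
    key = case_type.strip().lower()
    # Assumption: anything not explicitly listed is blocked by default.
    decision = POLICY.get(key, Decision.BLOCK)
    if decision is Decision.ALLOW_AND_LOG:
        audit_log.append({"user": user, "case_type": key})
    return decision


if __name__ == "__main__":
    print(check_request("Litigation", "junior.associate"))
    print(check_request("marketing", "comms.lead"))
    print(audit_log)
```

Defaulting to a block for unknown case types keeps the policy enforceable when new kinds of work appear before the table is updated.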

Looking beyond clear use

Clear-use AI policies are an essential first step, but organisations also need to look beyond them, Nikos Maroulis, VP of AI at Hack The Box, tells TECH ICONS. He says that while CISOs are beginning to treat prompt injection and model misuse as insider threat scenarios, and legal teams are moving to retrofit governance frameworks, these efforts only matter if they hold up under pressure.

“Security teams weren’t trained for this; defensive strategies must evolve fast. That means upskilling both red and blue teams to understand how AI can be exploited and defended in practice,” he said. “Operationalising purple team exercises is key here. Simulating real-world AI attack scenarios strengthens collaboration between offensive and defensive teams, exposing blind spots and benchmarking cybersecurity performance in a controlled environment.”

While generative AI presents significant advantages for efficiency and productivity, organisations across all sectors need to address the growing risk of data exposure before it’s too late.

By the time you notice the leak, the damage is already systemic — legal, reputational, operational.

All organizations, regardless of size, need to protect data, not just to protect their own reputation, but to comply with legal requirements and maintain ethical standards. This is why a clear-use AI policy is a necessity for all: businesses need to define acceptable use of AI tools, ensure staff understand what types of data are off-limits, and ensure security policies are followed to maintain compliance with data protection laws.

However, the hard work shouldn’t stop there. Organizations embracing generative AI also need to use tools, such as firewalls and access controls, to restrict sensitive data from being entered into public AI models. They also need to evolve traditional cybersecurity training to include generative AI attack simulations, helping teams to build their skills to defend against AI-specific threats such as prompt injection or output poisoning.
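One simple form such a control can take is a pre-submission screen that inspects a prompt before it leaves the organisation. The sketch below is a pattern-based stand-in, not a firewall or a full data loss prevention product: the `PATTERNS` table and the `screen_prompt` helper are hypothetical, and the three regular expressions are deliberately crude examples that a real deployment would need to tune and extend.

```python
import re

# Hypothetical detection rules; real deployments would need far more coverage.
PATTERNS = {
    "EMAIL": re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}"),
    "PRIVATE_KEY": re.compile(r"-----BEGIN [A-Z ]*PRIVATE KEY-----"),
    "MARKING": re.compile(
        r"\b(?:confidential|attorney[- ]client privileged)\b", re.IGNORECASE
    ),
}


def screen_prompt(prompt):
    """Return (redacted_prompt, labels_found) for a prompt about to be sent."""
    findings = []
    redacted = prompt
    for label, pattern in PATTERNS.items():
        if pattern.search(redacted):
            findings.append(label)
            # Replace every match with a visible placeholder.
            redacted = pattern.sub(f"[{label} REDACTED]", redacted)
    return redacted, findings


if __name__ == "__main__":
    text = "Summarise this confidential note from jane.doe@example.com"
    safe_text, labels = screen_prompt(text)
    print(labels)
    print(safe_text)
```

A screen like this can either redact and forward the prompt or block it outright and alert the security team; which is appropriate depends on the organisation's AI-use policy.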

Without these robust security measures, AI-driven efficiencies can quickly turn into data leak nightmares.